Jan Scheuermann, who has quadriplegia, brings a chocolate bar to her mouth using a robot arm she is guiding with her thoughts, while researcher Elke Brown, M.D., watches in the background (credit: UPMC)

Reaching out to “high five” someone, grasping and moving objects of different shapes and sizes, feeding herself dark chocolate.

For Jan Scheuermann and a team of researchers from the University of Pittsburgh School of Medicine and UPMC, accomplishing these seemingly ordinary tasks demonstrated for the first time that a person with longstanding quadriplegia can maneuver a mind-controlled, human-like robot arm in seven dimensions (7D) to consistently perform many of the natural and complex motions of everyday life.

In a study published in the online version of The Lancet, the researchers described the brain-computer interface (BCI) technology and training programs that allowed Ms. Scheuermann, 53, to intentionally move an arm, turn and bend a wrist, and close a hand for the first time in nine years.

Less than a year after she told the research team, “I’m going to feed myself chocolate before this is over,” Ms. Scheuermann savored its taste and announced as they applauded her feat, “One small nibble for a woman, one giant bite for BCI.”

“This is a spectacular leap toward greater function and independence for people who are unable to move their own arms,” agreed senior investigator Andrew B. Schwartz, Ph.D., professor, Department of Neurobiology, Pitt School of Medicine. “This technology, which interprets brain signals to guide a robot arm, has enormous potential that we are continuing to explore. Our study has shown us that it is technically feasible to restore ability; the participants have told us that BCI gives them hope for the future.”

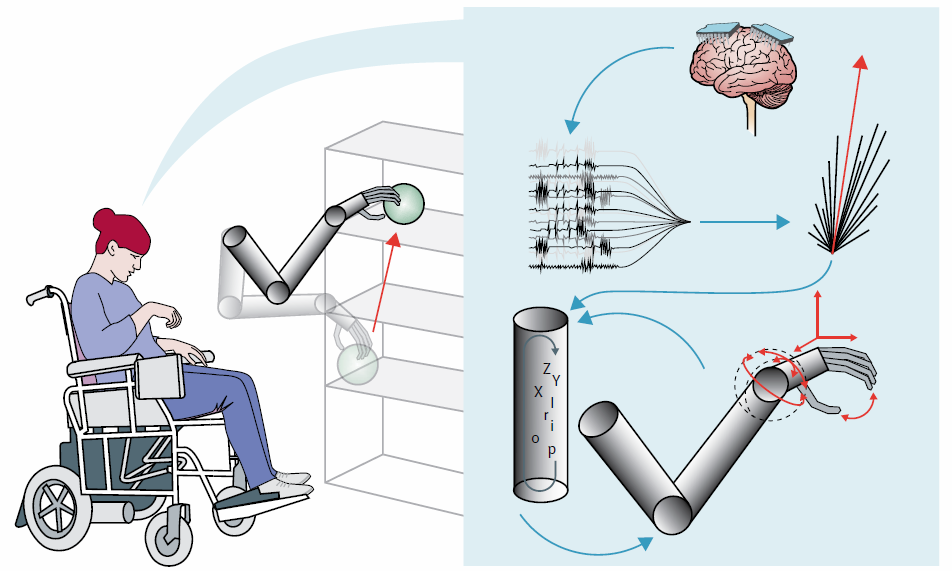

On Feb. 10, 2012, after screening tests confirmed that she was eligible for the study, co-investigator and UPMC neurosurgeon Elizabeth Tyler-Kabara, M.D., Ph.D., assistant professor, Department of Neurological Surgery, Pitt School of Medicine, placed two quarter-inch-square electrode grids, each with 96 tiny contact points, in the regions of Ms. Scheuermann’s brain that would normally control right arm and hand movement.

The electrode points pick up signals from individual neurons, and computer algorithms identify the firing patterns associated with particular observed or imagined movements, such as raising or lowering the arm or turning the wrist, explained lead investigator Jennifer Collinger, Ph.D., assistant professor, Department of Physical Medicine and Rehabilitation (PM&R), and research scientist for the VA Pittsburgh Healthcare System. That intent to move is then translated into actual movement of the robot arm, which was developed by Johns Hopkins University’s Applied Physics Lab.
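To make the decoding step more concrete, here is a minimal Python sketch of one classic idea from motor-cortex research, the population vector: each neuron is assumed to fire most for movement in its own preferred direction, and the intended movement is estimated as the rate-weighted average of those directions. This is a swapped-in illustration, not the algorithm used in the study; the function name, the baseline and modulation-depth parameters, and the example numbers are all hypothetical.

```python
from collections.abc import Sequence


def population_vector(
    rates: Sequence[float],
    baselines: Sequence[float],
    modulation_depths: Sequence[float],
    preferred_directions: Sequence[Sequence[float]],
) -> list[float]:
    """Estimate an intended movement vector from firing rates (hypothetical sketch).

    Each unit's rate is normalized as (rate - baseline) / modulation_depth,
    that value weights the unit's preferred direction, and the weighted
    directions are averaged over all units.
    """
    n = len(rates)
    if n == 0:
        raise ValueError("need at least one unit")
    if not (len(baselines) == len(modulation_depths) == len(preferred_directions) == n):
        raise ValueError("all per-unit inputs must have the same length")

    dims = len(preferred_directions[0])
    estimate = [0.0] * dims
    for rate, base, depth, direction in zip(
        rates, baselines, modulation_depths, preferred_directions
    ):
        # Firing above baseline votes for the preferred direction, below votes against it.
        weight = (rate - base) / depth
        for d in range(dims):
            estimate[d] += weight * direction[d]
    return [value / n for value in estimate]


# Two hypothetical units tuned to +x and +y; only the first fires above baseline.
print(population_vector([30.0, 10.0], [10.0, 10.0], [10.0, 10.0],
                        [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]))  # [1.0, 0.0, 0.0]
```

With two 96-point grids the same weighted sum would simply run over more units; in practice the per-unit parameters would have to be estimated from recorded data, and the values above are made up.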

Two days after the operation, the team connected the computer to the two terminals that protrude from Ms. Scheuermann’s skull. “We could actually see the neurons fire on the computer screen when she thought about closing her hand,” Dr. Collinger said. “When she stopped, they stopped firing. So we thought, ‘This is really going to work.’”

7D control

How the neuroprosthetic system works. Two silicon-substrate microelectrode arrays surgically implanted in the motor cortex (upper right) allow recordings of ensemble neuronal activity, which are then translated into intended movement commands. This brain-derived information is conveyed to a shared controller that integrates the participant’s intent, robotic position feedback, and task-dependent constraints. Using this bioinspired brain-machine interface, the paralyzed woman could manipulate objects of various shapes and sizes in a 3D workspace. (Credit: Jennifer L. Collinger et al./The Lancet)

Within a week, Ms. Scheuermann could reach in and out, left and right, and up and down with the arm, which she named Hector, giving her 3-dimensional control that had her high-fiving with the researchers. “What we did in the first week they thought we’d be stuck on for a month,” she noted.

Before three months had passed, she could also flex the wrist back and forth, move it from side to side, rotate it clockwise and counter-clockwise, and grip objects. Together with the three dimensions of reaching, these three wrist movements and the grasp add up to what scientists call 7D control.
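The arithmetic behind “7D” is three translational dimensions (in/out, left/right, up/down), three wrist-orientation dimensions, and one grasp dimension. The Python sketch below is hypothetical and is not the Johns Hopkins arm’s actual interface: it treats a decoded 7D velocity command as one step of arm motion. The field names, units, time step, the ±0.5 m workspace bound, and the 0-to-1 grasp scale are all assumptions; the clamping only loosely mirrors the idea of task-dependent constraints in a shared controller.

```python
from collections.abc import Sequence
from dataclasses import dataclass


@dataclass(frozen=True)
class ArmState:
    """Hypothetical 7D state of a prosthetic arm (names and units are assumptions)."""
    x: float = 0.0      # left/right hand position (m)
    y: float = 0.0      # in/out hand position (m)
    z: float = 0.0      # up/down hand position (m)
    roll: float = 0.0   # wrist rotation, clockwise/counter-clockwise (rad)
    pitch: float = 0.0  # wrist flexion, back and forth (rad)
    yaw: float = 0.0    # wrist deviation, side to side (rad)
    grasp: float = 0.0  # hand aperture: 0.0 = open, 1.0 = closed


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def step(state: ArmState, velocity: Sequence[float], dt: float,
         workspace: float = 0.5) -> ArmState:
    """Apply one 7D velocity command for dt seconds (hypothetical sketch).

    Hand position is kept inside a cube of half-width `workspace` meters
    and grasp inside [0, 1]; wrist angles are integrated without limits.
    """
    if len(velocity) != 7:
        raise ValueError("expected 7 components: 3 translation, 3 wrist, 1 grasp")
    vx, vy, vz, vroll, vpitch, vyaw, vgrasp = velocity
    return ArmState(
        x=clamp(state.x + vx * dt, -workspace, workspace),
        y=clamp(state.y + vy * dt, -workspace, workspace),
        z=clamp(state.z + vz * dt, -workspace, workspace),
        roll=state.roll + vroll * dt,
        pitch=state.pitch + vpitch * dt,
        yaw=state.yaw + vyaw * dt,
        grasp=clamp(state.grasp + vgrasp * dt, 0.0, 1.0),
    )
```

For example, `step(ArmState(), [0.1, 0, 0, 0, 0, 0, 2.0], dt=1.0)` moves the hand 0.1 m along x and, because grasp is capped, returns a fully closed hand (`grasp=1.0`).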

In a study task called the Action Research Arm Test, Ms. Scheuermann guided the arm from a position four inches above a table to pick up blocks and tubes of different sizes, a ball, and a stone and to put them down on a nearby tray. She also picked up cones from one base to restack them on another a foot away, another task requiring grasping, transporting and positioning of objects with precision.

“Our findings indicate that by a variety of measures, she was able to improve her performance consistently over many days,” Dr. Schwartz explained. “The training methods and algorithms that we used in monkey models of this technology also worked for Jan, suggesting that it’s possible for people with long-term paralysis to recover natural, intuitive command signals to orient a prosthetic hand and arm to allow meaningful interaction with the environment.”

Electrocorticography (ECoG) study

In a separate study, researchers are also investigating BCI technology that uses an electrocorticography (ECoG) grid, which sits on the surface of the brain rather than slightly penetrating the tissue, as the grids used for Ms. Scheuermann do.

In both studies, “we’re recording electrical activity in the brain, and the goal is to try to decode what that activity means and then use that code to control an arm,” said senior investigator Michael Boninger, M.D., professor and chair, PM&R, and director of UPMC Rehabilitation Institute. “We are learning so much about how the brain controls motor activity, thanks to the hard work and dedication of our trial participants. Perhaps in five to 10 years, we will have a device that can be used in the day-to-day lives of people who are not able to use their own arms.”

The next step for BCI technology will likely use a two-way electrode system that not only captures the intention to move but also stimulates the brain to generate sensation, potentially allowing a user to adjust grip strength to firmly grasp a doorknob or gently cradle an egg.

After that, “we’re hoping this can become a fully implanted, wireless system that people can actually use in their homes without our supervision,” Dr. Collinger said. “It might even be possible to combine brain control with a device that directly stimulates muscles to restore movement of the individual’s own limb.”

For now, Ms. Scheuermann is expected to continue to put the BCI technology through its paces for two more months, and then the implants will be removed in another operation.

“This is the ride of my life,” she said. “This is the rollercoaster. This is skydiving. It’s just fabulous, and I’m enjoying every second of it.”

The BCI projects are funded by the Defense Advanced Research Projects Agency, National Institutes of Health, the U.S. Department of Veterans Affairs, the UPMC Rehabilitation Institute and the University of Pittsburgh Clinical and Translational Science Institute.

For more information about participating in the trials, call 412-383-1355.

How this compares to previous studies

The results of previous work have shown that neural activity can be recorded from the motor cortex and translated into movement of an external device or the individual’s own muscles, the authors say. However, until now, human studies had not shown whether natural and complex movements could be performed consistently across different tasks.

“Here, we have shown that a person with chronic tetraplegia can do complex and coordinated movements freely in seven-dimensional space consistently over several weeks of testing. This study is different from previous studies in which investigators had little control in translation dimensions, used staged control schemes, or had insufficient workspace to complete very structured tasks.

“Increasing dimensional control allows our participant to fully explore the workspace by placing the hand in the desired three-dimensional location and orienting the palm in three dimensions. This study is the first time that performance has been quantified with functional clinical assessments. Although in most human studies only a few days of performance data were reported, we have shown that the participant learned to improve her performance consistently over many days using different metrics.

“By using training methods and algorithms validated in non-human primate work, individuals with long-term paralysis can recover the natural and intuitive command signals for hand placement, orientation, and reaching to move freely in space and interact with the environment.”
